Audit

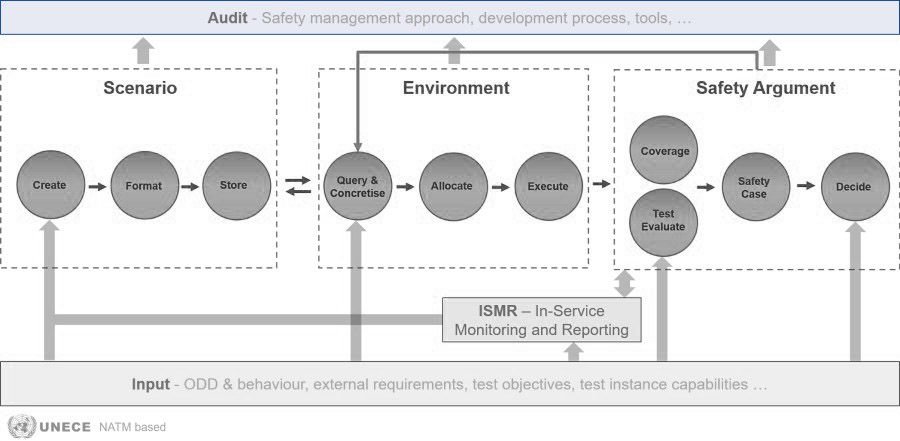

This section explains the steps to take when working through each part of the SUNRISE Safety Assurance Framework (SAF). It includes references to SUNRISE deliverable sections for further details (indicated by “D”). These steps can guide both the auditors and the appliers of the SAF. The stepwise approach used in this section caters to the work of certifiers and regulators, who normally work with a process format rather than a framework. In this context, “Auditors” are people who audit the application of the SUNRISE SAF (such as certifiers or regulators), whereas “Appliers” are people who apply the SUNRISE SAF to assess the safety of Cooperative Connected Automated Mobility (CCAM) systems (such as vehicle manufacturers or their suppliers).

The following important remarks apply to the contents of this section:

- This section may be modified and will be expanded with additional steps, based on the contents of deliverables that were not yet available at the time of writing.

- Auditing CCAM safety assurance procedures (such as the SUNRISE SAF) may involve aspects beyond the steps described in this section. These include, but are not limited to, audits of the internal procedures for design, development, testing and overall safety management at CCAM technology developers (such as vehicle manufacturers and their suppliers). Although these aspects may be important, they are not treated here because they fall outside the scope of the SUNRISE project.

For audit instructions, see the individual SAF block descriptions.