Query & Concretise

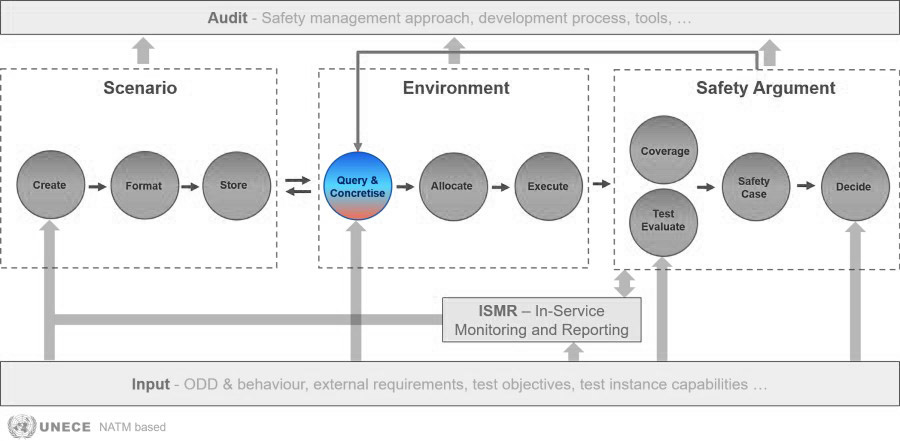

The Query & Concretise block takes input from the Input block, such as the ODD description, external requirements, CCAM system specifications or pass/fail criteria. It passes relevant data extracted from these inputs to the SUNRISE Data Framework (DF) in the form of a query, in order to retrieve scenarios from the external scenario databases connected to it. The scenarios returned from the SUNRISE DF to the Query & Concretise block can be logical or concrete scenarios. In a logical scenario, all parameters are defined using value ranges, so an infinite number of concrete scenarios can be derived from it. The next step is to concretise these parameter ranges into specific values and to combine the resulting concrete scenarios with the test objectives. Once combined, the scenarios are allocated to a specific test environment for execution.

The following overview describes the workflow of the Query & Concretise block:

- The information coming from the Input block will be used to query the external scenario databases (SCDBs) via the SUNRISE DF. The query will return logical or concrete scenarios. In this step, scenarios hosted within SCDBs become test scenarios, because they are associated with the intended testing purposes when they are retrieved via the SUNRISE DF.

- If the returned scenarios are logical scenarios (parameters are described as ranges), then:

    - concrete scenarios with concrete parameter values will be derived from the logical scenarios;

    - the concrete test scenarios will be combined with the test objectives.

- If the returned scenarios are concrete scenarios (parameters are described as values), then:

    - the concrete test scenarios will be combined with the test objectives.

- The concrete test scenarios, together with their test objectives, will be sent to the Allocate block for allocation to a test environment and subsequent execution.

- During or after execution, the Safety Argument block may feed the test outcome back to the Query & Concretise block, which can then select the next set of concrete parameter combinations to be allocated and executed. An example of this iterative process is the exploration of test results around a failure point or around the border of the ODD.
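The branching workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the SUNRISE implementation: the class names `LogicalScenario`, `ConcreteScenario` and `TestScenario`, the functions `concretise` and `query_and_concretise`, and the use of a uniform grid to turn ranges into values are all hypothetical. The grid merely stands in for the sampling methodologies described below, and real scenarios would be OpenSCENARIO files rather than plain dictionaries.

```python
import itertools
from dataclasses import dataclass


@dataclass
class LogicalScenario:
    name: str
    ranges: dict  # parameter name -> (min, max); hypothetical representation


@dataclass
class ConcreteScenario:
    name: str
    values: dict  # parameter name -> single concrete value


@dataclass
class TestScenario:
    scenario: ConcreteScenario
    objectives: list  # test objectives, e.g. pass/fail criteria


def concretise(logical, steps=3):
    """Derive concrete scenarios from a logical one on a uniform grid (illustrative choice)."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    names = list(logical.ranges)
    axes = [[lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
            for lo, hi in logical.ranges.values()]
    return [ConcreteScenario(logical.name, dict(zip(names, combo)))
            for combo in itertools.product(*axes)]


def query_and_concretise(returned, objectives, steps=3):
    """Turn the scenarios returned by a query into test scenarios with objectives."""
    tests = []
    for scenario in returned:
        if isinstance(scenario, LogicalScenario):
            concrete = concretise(scenario, steps)  # ranges -> concrete values
        else:
            concrete = [scenario]                   # already concrete
        tests.extend(TestScenario(c, list(objectives)) for c in concrete)
    return tests
```

For example, a logical cut-in scenario with two parameter ranges and `steps=3` yields nine concrete test scenarios, while a returned concrete scenario is passed through unchanged; all of them then carry the same test objectives on their way to the Allocate block.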

Logical or concrete scenarios can be retrieved through queries from external databases connected to the SUNRISE Data Framework. These queries are constructed using tags defined in the OpenLABEL format and adhere to a harmonised ontology developed within the SUNRISE project. This approach ensures a unified understanding of all elements and their interrelationships across the connected databases.
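The idea of a tag-based query that must respect a shared ontology can be illustrated with a small Python sketch. It is a hypothetical stand-in, not the SUNRISE DF query interface: queries are modelled as nested tuples of the operators AND, OR and NOT over tag strings, each scenario is reduced to a set of tags, and the tag names (`Motorway`, `CutIn`, `Rain`, ...) are invented placeholders rather than actual OpenLABEL identifiers. The query is first checked against the ontology and only then evaluated.

```python
# Hypothetical query representation: a tag string, or a tuple (operator, operand, ...).
ALLOWED_OPERATORS = {"AND", "OR", "NOT"}


def validate(query, ontology):
    """Return a list of problems: tags outside the ontology or malformed operator nodes."""
    if isinstance(query, str):
        return [] if query in ontology else [f"unknown tag {query!r}"]
    if not isinstance(query, tuple) or not query:
        return [f"malformed node {query!r}"]
    op, *args = query
    if not isinstance(op, str) or op not in ALLOWED_OPERATORS:
        return [f"unknown operator {op!r}"]
    if not args or (op == "NOT" and len(args) != 1):
        return [f"wrong number of operands for {op}"]
    problems = []
    for arg in args:
        problems.extend(validate(arg, ontology))
    return problems


def matches(query, tags):
    """Evaluate an already validated query against the tag set of one scenario."""
    if isinstance(query, str):
        return query in tags
    op, *args = query
    if op == "AND":
        return all(matches(a, tags) for a in args)
    if op == "OR":
        return any(matches(a, tags) for a in args)
    return not matches(args[0], tags)  # the only remaining operator is NOT


def run_query(query, catalogue, ontology):
    """Return the names of scenarios whose tags satisfy the query."""
    problems = validate(query, ontology)
    if problems:
        raise ValueError("; ".join(problems))
    return sorted(name for name, tags in catalogue.items() if matches(query, tags))


ontology = {"Motorway", "Urban", "CutIn", "Rain"}
catalogue = {
    "scn-001": {"Motorway", "CutIn", "Rain"},
    "scn-002": {"Motorway", "CutIn"},
    "scn-003": {"Urban", "CutIn"},
}
print(run_query(("AND", "Motorway", "CutIn", ("NOT", "Rain")), catalogue, ontology))
```

Rejecting terms outside the ontology before evaluation mirrors the point of a harmonised ontology: a tag that one database does not define would otherwise silently match nothing instead of being reported.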

To derive concrete scenarios from logical ones, several sampling methodologies have been developed. These methodologies discretise the continuous parameter space and select specific samples with concrete parameter values within this space. The samples are chosen to estimate the distribution of a safety measure across the parameter space. Alternatively, the same methodologies can be applied to optimise for other test objectives, such as identifying the pass/fail boundary within the parameter space.
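The two uses of sampling named in the paragraph above can be illustrated with generic techniques from Python's standard library. This sketch swaps in Latin hypercube sampling for estimating the distribution of a safety measure and bisection for locating a pass/fail boundary; neither is claimed to be one of the SUNRISE methodologies. The parameter names and ranges, the toy safety measure `time_gap` and the pass criterion (time gap of at least 0.8 s at 20 m/s) are hypothetical, and in practice each evaluation would be a test execution.

```python
import random


def latin_hypercube(ranges, n, seed=0):
    """Latin hypercube sample: each range is cut into n equal strata, each used exactly once."""
    rng = random.Random(seed)
    columns = {}
    for name, (lo, hi) in ranges.items():
        width = (hi - lo) / n
        strata = list(range(n))
        rng.shuffle(strata)  # random pairing of strata across parameters
        columns[name] = [lo + (k + rng.random()) * width for k in strata]
    return [{name: col[i] for name, col in columns.items()} for i in range(n)]


def find_boundary(passes, lo, hi, tol=1e-3):
    """Bisection on one parameter; assumes fail at lo, pass at hi, one transition between."""
    if passes(lo) or not passes(hi):
        raise ValueError("expected passes(lo) to be False and passes(hi) to be True")
    runs = 2  # the two bracket evaluations
    while hi - lo > tol:
        mid = (lo + hi) / 2
        runs += 1
        if passes(mid):
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2, runs


def time_gap(sample):
    # Hypothetical toy safety measure: time gap [s] = distance gap [m] / ego speed [m/s].
    return sample["gap"] / sample["ego_speed"]


# Use 1: estimate the distribution of the safety measure over the parameter space.
samples = latin_hypercube({"ego_speed": (10.0, 30.0), "gap": (5.0, 50.0)}, n=200)
gaps = [time_gap(s) for s in samples]
share_below_1s = sum(g < 1.0 for g in gaps) / len(gaps)  # estimated share with time gap < 1 s

# Use 2: locate the pass/fail boundary in the distance gap at a fixed ego speed of 20 m/s.
boundary, runs = find_boundary(lambda gap: gap / 20.0 >= 0.8, 0.0, 50.0)
print(f"share below 1 s: {share_below_1s:.2f}, boundary ~ {boundary:.3f} m after {runs} runs")
```

With a tolerance of 0.001 over [0, 50], the bisection needs 18 evaluations, whereas a uniform grid at the same resolution would need about 50,000, which is why boundary-focused strategies are attractive when every evaluation is a costly test run.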

SAF Application Guidelines for 'Query & Concretise'

In the list below, “D” stands for Deliverable. All deliverables of the SUNRISE project can be found here.

- Review the inputs to the Query & Concretise block, previously called the COTSATO process (COncretising Test Scenarios and Associating Test Objectives) (D3.2 Section 7.3):

    - Verify that the ODD description is provided and follows the format guidelines in ISO 34503.

    - Check that the system requirements are clearly defined.

    - Ensure the CCAM system under test is properly specified.

    - Confirm that variables to be measured during test execution are listed.

    - Validate that pass/fail criteria for successful test execution are defined.

- Evaluate the use of the SUNRISE Data Framework (DF):

    - Confirm proper connection to federated scenario databases through the DF (D6.3 Section 2.3 and Annex 1).

    - Verify authentication and authorisation mechanisms are properly configured for each scenario database (D6.2 Section 2.4, D6.3 Section 3.2.3).

    - Verify that the DF's query capabilities work as intended across multiple connected scenario databases (D6.2 Section 2.2.2 and 3.1; D6.3 Section 2.3.1.3 and Annex 2).

    - Validate semantic search functionality using harmonised ontologies (D6.2 Section 2.2.2; D5.2 Section 3.4).

    - Confirm Automated Query Criteria Generation (AQCG) compatibility (D6.1 Section 3.2.3, D6.3 Section 3.2.5).

    - Ensure scenario data exchange follows ASAM OpenSCENARIO, OpenDRIVE, and OpenLABEL standards (D6.2 Section 3.3).

    - Validate input/output interface compliance with SUNRISE DF specifications (D6.2 Section 3.3).

    - Confirm data format consistency across all connected scenario databases (D6.2 Section 3.3).

- Examine the query used to fetch scenarios from the scenario database(s):

    - Verify Ontological Compliance (D5.2 Section 4 and 3.4.3):

        - Ensure the query uses the SUNRISE ontology structure.

        - Confirm query operators are properly implemented (AND, OR, NOT, etc.).

    - Apply Standardised Data Format Requirements (D5.2 Section 4):

        - Verify the query's compatibility with the OpenLABEL JSON format for scenario tagging.

        - Ensure the query supports OpenSCENARIO and OpenDRIVE format requirements.

        - Validate that the query can handle both logical and concrete scenario representations.


- Evaluate the quality of individual scenarios:

    - Check the testing purpose metrics to ensure scenarios are relevant for the intended testing (D5.3 Section 3.1, 4.1, 5.1, 6.1).

    - Verify scenario description quality including completeness and unambiguity (D5.3 Section 3.2, 4.2, 5.2, 6.2).

    - Assess scenario exposure and probability to verify if scenarios represent realistic situations (D5.3 Section 3.3, 4.3, 5.3, 6.3).

    - Assess diversity and similarity between scenarios to avoid redundant testing (D5.3 Section 3.4, 4.4, 5.4, 6.4).

    - Verify scenario coverage to ensure comprehensive testing of the Operational Design Domain (ODD) and the parameter space (D5.3 Section 3.5, 4.5, 5.5, 6.5).

    - Check that the scenario concept complies with the requirements (D3.2 Section 4).

    - Confirm that the scenario parameters meet the requirements (D3.2 Section 5).

    - Validate that the parameter spaces adhere to the requirements (D3.2 Section 6).

- Review the application of the subspace creation methodology required to convert logical scenarios (obtained through querying) into concrete test cases for execution:

    - Identification of critical parameter regions (D3.4 Section 4.1 and 4.6).

    - Structured exploration of the parameter space (D3.4 Section 4.2).

    - Focus on performance-sensitive regions (D3.4 Section 4.6).

    - Identification of multiple failure conditions (D3.4 Section 4.7).

    - Focus on pass/fail boundaries rather than exhaustive parameter coverage (D3.4 Section 4.8).

- Review the test cases generated by the COTSATO process (D3.2 Section 7.5):

    - Ensure each test case includes a test scenario, metrics, validation criteria, and pass/fail criteria.

    - Depending on the purpose, verify that the metrics cover aspects such as safety, functional performance, HMI, operational performance, reliability, and scenario validation.

    - Confirm that a clear mapping exists between system requirements and the generated test cases.
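The diversity, similarity and coverage checks from the scenario-quality guideline above can be approximated with simple geometric measures once concrete scenarios are expressed as points in a normalised parameter space. The Python sketch below is a minimal illustration, not the metrics defined in D5.3: the function names, the normalised Euclidean distance, the duplicate threshold of 0.05 and the grid-cell coverage measure are all assumptions chosen for clarity.

```python
import itertools
import math


def normalise(values, ranges):
    """Map each parameter value to [0, 1] using its (min, max) range."""
    return [(values[p] - lo) / (hi - lo) for p, (lo, hi) in ranges.items()]


def near_duplicates(scenarios, ranges, threshold=0.05):
    """Index pairs of scenarios closer than threshold in normalised Euclidean distance."""
    points = [normalise(s, ranges) for s in scenarios]
    return [(i, j) for i, j in itertools.combinations(range(len(points)), 2)
            if math.dist(points[i], points[j]) < threshold]


def grid_coverage(scenarios, ranges, bins=4):
    """Share of the bins**d grid cells over the parameter space hit by at least one scenario."""
    cells = set()
    for s in scenarios:
        # Clamp x == 1.0 into the last bin.
        cells.add(tuple(min(int(x * bins), bins - 1) for x in normalise(s, ranges)))
    return len(cells) / bins ** len(ranges)


ranges = {"speed": (0.0, 100.0), "gap": (0.0, 100.0)}
scenarios = [{"speed": 10.0, "gap": 10.0},
             {"speed": 11.0, "gap": 10.0},
             {"speed": 90.0, "gap": 90.0}]
print(near_duplicates(scenarios, ranges), grid_coverage(scenarios, ranges))
```

Here the scenarios at (10, 10) and (11, 10) are flagged as a near-duplicate pair, and the three scenarios occupy 2 of the 16 cells of a 4 × 4 grid, a coverage of 0.125. Grid-cell coverage grows exponentially with the number of parameters, so it is only practical for low-dimensional parameter spaces.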
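The final review step, checking that each generated test case is complete and that every system requirement is traced to at least one test case, can be automated in a simple form. The following Python sketch assumes a hypothetical `TestCase` record whose fields mirror the elements listed in the guideline; the field names, identifiers and example criteria values are illustrative only and are not defined by D3.2.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    test_id: str
    scenario: str             # reference to the concrete test scenario
    metrics: list             # e.g. safety or functional-performance metrics
    validation_criteria: list
    pass_fail_criteria: list
    requirement_ids: list = field(default_factory=list)  # traced system requirements


def review_test_cases(test_cases, requirement_ids):
    """Return (IDs of incomplete test cases, requirement IDs not covered by any test case)."""
    incomplete = [
        tc.test_id for tc in test_cases
        if not (tc.scenario and tc.metrics and tc.validation_criteria and tc.pass_fail_criteria)
    ]
    covered = {req for tc in test_cases for req in tc.requirement_ids}
    uncovered = sorted(set(requirement_ids) - covered)
    return incomplete, uncovered


tc1 = TestCase("TC-1", "cut-in-001", ["min TTC"], ["scenario reproduced"], ["TTC >= 1.5 s"], ["REQ-1"])
tc2 = TestCase("TC-2", "cut-in-002", ["min TTC"], ["scenario reproduced"], [], ["REQ-2"])
print(review_test_cases([tc1, tc2], ["REQ-1", "REQ-2", "REQ-3"]))
```

In this example, TC-2 is reported as incomplete because it has no pass/fail criteria, and REQ-3 is reported as untested because no test case maps to it. Such a check only confirms that the mapping exists; whether the chosen metrics are adequate for the testing purpose still requires expert review.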