Scenario

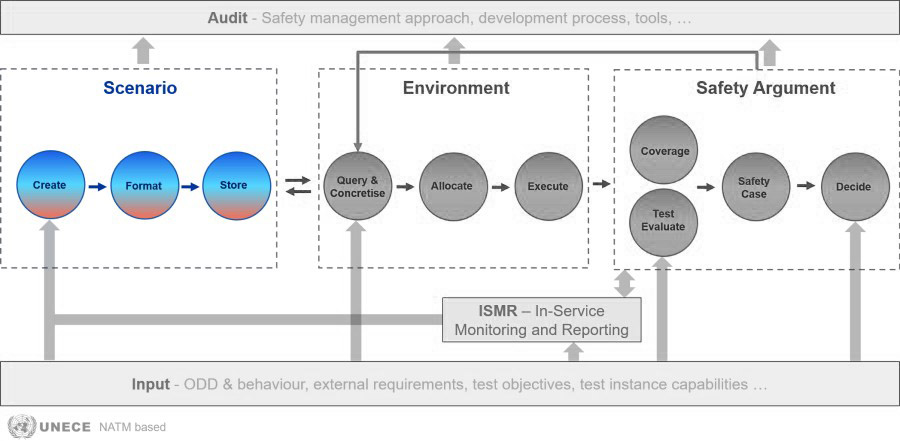

The Scenario block focuses on managing scenarios critical for safety assurance, encompassing three key processes: creation, formatting, and storage.

- Create involves generating scenarios using data-driven or knowledge-driven approaches. Scenarios are derived with methodologies such as StreetWise, which extracts real-world scenarios and statistics from driving data, or obtained from databases such as Safety Pool™, which leverage various scenario generation methods. These processes take place within individual scenario databases (SCDBs) and reflect database-specific requirements and use cases. Although scenario creation is handled independently by the SCDB providers, it is an integral part of the overarching Safety Assurance Framework (SAF).

- Format structures scenarios into appropriate representations for effective communication and downstream testing. Multiple levels of abstraction are employed to cater to diverse stakeholders, ranging from human-readable functional and abstract-level scenarios to machine-readable logical and concrete-level scenarios. Common formats like ASAM OpenSCENARIO, OpenDRIVE, and BSI Flex 1889 ensure interoperability and standardization.

- Store consolidates formatted scenarios within SCDBs, enabling seamless access and integration. SUNRISE proposes the SUNRISE Data Framework to link multiple SCDBs, requiring database owners to adhere to shared formats and abstraction levels. This federated approach ensures unified access while maintaining flexibility for individual database management.
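
The difference between the logical and concrete abstraction levels described under Format can be sketched in a few lines. This is a minimal illustration under stated assumptions: the class names, the parameters `ego_speed_mps` and `cut_in_gap_m`, and uniform sampling are hypothetical choices, not part of ASAM OpenSCENARIO or of any SUNRISE specification. The logical scenario holds a range per parameter, and each concrete scenario fixes one value per parameter.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ParameterRange:
    """Closed interval [low, high] for one scenario parameter (hypothetical type)."""
    low: float
    high: float


@dataclass
class LogicalScenario:
    """Logical level: each parameter is given as a range (hypothetical type)."""
    name: str
    parameters: dict[str, ParameterRange]

    def sample_concrete(self, rng: random.Random) -> dict[str, float]:
        """Concrete level: draw one fixed value per parameter, uniformly within its range."""
        return {param: rng.uniform(r.low, r.high) for param, r in self.parameters.items()}


# Hypothetical cut-in scenario with two illustrative parameters.
cut_in = LogicalScenario(
    name="cut-in",
    parameters={
        "ego_speed_mps": ParameterRange(15.0, 35.0),
        "cut_in_gap_m": ParameterRange(5.0, 40.0),
    },
)
rng = random.Random(42)  # fixed seed so the sampled concrete scenarios are reproducible
concrete_scenarios = [cut_in.sample_concrete(rng) for _ in range(3)]
print(concrete_scenarios)
```

Uniform sampling is only one option; the sampling strategy for concrete scenarios is a design choice of the test campaign.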

Together, these processes enable a structured, standardized, and scalable approach to scenario management for safety assurance of CCAM systems.

SAF Application Guidelines for 'Scenario'

The steps outlined below guide users in applying the Scenario block of the SUNRISE SAF, with a focus on evaluating the structure, quality, and completeness of a scenario database. These steps address the overarching characteristics of the scenario set rather than the detailed content of individual test cases, which are handled within the Environment block. It is important to note that the sub-processes related to scenario creation, formatting, and storage are typically the responsibility of the scenario database provider. As such, this guidance applies to the Scenario block as a whole and does not prescribe stepwise procedures for each sub-block individually.

In the list below, “D” stands for Deliverable. All deliverables of the SUNRISE project can be found here.

- Check standardisation compliance:

  - Verify ASAM OpenSCENARIO, OpenDRIVE, and OpenLABEL format compliance (D6.2 Sections 3.3 and 1.5.2).

  - Confirm RESTful API compatibility for data exchange (D6.3 Section 3.2.3.5).

  - Check compliance with SUNRISE ontology standards (D5.2 Section 3.4.2; D6.2 Section 3.2).
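
Part of the format check can be automated. The sketch below only confirms that a file is well-formed XML with an `OpenSCENARIO` root element and a `FileHeader` carrying the header attributes used in OpenSCENARIO 1.x (`revMajor`, `revMinor`, `date`, `description`, `author`). It assumes XML-based OpenSCENARIO 1.x files and is a quick sanity check only: full compliance requires validation against the official ASAM XML schema. The function name `check_openscenario_header` is hypothetical.

```python
import xml.etree.ElementTree as ET

# <FileHeader> attributes in OpenSCENARIO 1.x; confirm against the XSD of the version in use.
REQUIRED_HEADER_ATTRIBUTES = ("revMajor", "revMinor", "date", "description", "author")


def check_openscenario_header(xml_text: str) -> list[str]:
    """Basic structural check of an OpenSCENARIO XML document (not a schema validation).

    Returns a list of problems; an empty list means these basic checks passed.
    """
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    problems = []
    if root.tag != "OpenSCENARIO":
        problems.append(f"unexpected root element <{root.tag}>")
    header = root.find("FileHeader")  # direct child of the root element
    if header is None:
        problems.append("missing <FileHeader>")
    else:
        for attr in REQUIRED_HEADER_ATTRIBUTES:
            if attr not in header.attrib:
                problems.append(f"<FileHeader> lacks attribute '{attr}'")
    return problems


sample = """<OpenSCENARIO>
  <FileHeader revMajor="1" revMinor="2" date="2024-01-01T00:00:00"
              description="cut-in example" author="example"/>
</OpenSCENARIO>"""
print(check_openscenario_header(sample))  # []
```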

- Evaluate the quality of the scenario set:

  - Testing Purpose Metrics: Evaluate scenario relevance for specific test objectives (D5.3 Sections 3.1, 4.1, 5.1, and 6.1).

  - Scenario Description Metrics: Assess the completeness and clarity of scenario descriptions (D5.3 Sections 3.2, 4.2, 5.2, and 6.2).

  - Exposure Metrics: Validate real-world representativeness and frequency data (D5.3 Sections 3.3, 4.3, 5.3, and 6.3).

  - (Dis)similarity Metrics: Ensure scenario diversity within the database (D5.3 Sections 3.4, 4.4, 5.4, and 6.4).

  - Coverage Metrics: Analyse parameter space coverage and ODD completeness (D5.3 Sections 3.5, 4.5, 5.5, and 6.5).
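
As one illustration of a coverage metric, the sketch below divides each parameter range into equal bins and reports the fraction of grid cells that contain at least one scenario. This grid-based measure is an assumption chosen for illustration, not the metric definition in D5.3; the function name `grid_coverage` and the example parameters are hypothetical. The number of cells grows exponentially with the number of parameters, so this approach is only practical for low-dimensional parameter spaces.

```python
def grid_coverage(samples, bounds, bins_per_dim):
    """Fraction of grid cells in the parameter space holding at least one scenario.

    samples: list of tuples with one value per parameter
    bounds:  list of (low, high) pairs, one per parameter, with low < high
    """
    occupied = set()
    for point in samples:
        cell = []
        for value, (low, high) in zip(point, bounds):
            if not low <= value <= high:
                break  # outside the declared parameter space: not counted
            # Bin index; a value equal to `high` goes into the last bin.
            index = min(int((value - low) / (high - low) * bins_per_dim), bins_per_dim - 1)
            cell.append(index)
        else:
            occupied.add(tuple(cell))
    return len(occupied) / bins_per_dim ** len(bounds)


# Hypothetical two-parameter space: ego speed [m/s] and initial gap [m].
bounds = [(10.0, 40.0), (0.0, 60.0)]
samples = [(12.0, 5.0), (25.0, 30.0), (39.0, 59.0), (11.0, 6.0)]
print(grid_coverage(samples, bounds, bins_per_dim=3))  # 3 of 9 cells occupied
```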

- Evaluate integration into the SUNRISE Data Framework (DF):

  - Confirm proper connection to the DF (D6.3 Section 2.3 and Annex 1).

  - Verify that authentication and authorisation mechanisms are properly configured for each scenario database (D6.2 Section 2.4; D6.3 Section 3.2.3).

  - Verify that the DF’s query capabilities work as intended across connected scenario databases (D6.2 Section 2.2.2; D6.3 Section 2.3.1.3 and Annex 2).

  - Validate semantic search functionality using harmonised ontologies (D6.2 Section 2.2.2; D5.2 Section 3.4).

  - Confirm Automated Query Criteria Generation (AQCG) compatibility (D6.1 Section 3.2.3; D6.3 Section 3.2.5).

  - Ensure that scenario data exchange follows the ASAM OpenSCENARIO, OpenDRIVE, and OpenLABEL standards (D6.2 Section 3.3).

  - Validate input/output interface compliance with the SUNRISE DF specifications (D6.2 Section 3.3).

  - Confirm data format consistency across all connected databases (D6.2 Section 3.3).
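
The cross-database query and format-consistency checks can be rehearsed against in-memory stand-ins before testing the real RESTful interfaces. In the sketch below, `InMemorySCDB`, `federated_query`, the `format` field and the accepted format labels are hypothetical and do not reproduce the DF API described in D6.3. The same query is sent to every connected database, each hit is tagged with its origin, and records in an unexpected format are reported.

```python
from dataclasses import dataclass, field

# Hypothetical labels for the exchange formats named in the checklist.
ACCEPTED_FORMATS = {"OpenSCENARIO", "OpenDRIVE", "OpenLABEL"}


@dataclass
class InMemorySCDB:
    """Stand-in for one connected scenario database (hypothetical, not the DF API)."""
    name: str
    scenarios: list[dict] = field(default_factory=list)

    def query(self, **criteria):
        """Return scenarios whose fields equal all given criteria."""
        return [s for s in self.scenarios
                if all(s.get(key) == value for key, value in criteria.items())]


def federated_query(databases, **criteria):
    """Send one query to every SCDB, tag hits with their origin and report format issues."""
    results, issues = [], []
    for db in databases:
        for scenario in db.query(**criteria):
            fmt = scenario.get("format")
            if fmt not in ACCEPTED_FORMATS:
                issues.append(f"{db.name}/{scenario.get('id')}: unexpected format {fmt!r}")
            results.append({**scenario, "source_db": db.name})
    return results, issues


db_a = InMemorySCDB("SCDB-A", [{"id": "a1", "type": "cut-in", "format": "OpenSCENARIO"}])
db_b = InMemorySCDB("SCDB-B", [
    {"id": "b1", "type": "cut-in", "format": "custom-json"},
    {"id": "b2", "type": "lane-change", "format": "OpenSCENARIO"},
])
results, issues = federated_query([db_a, db_b], type="cut-in")
print([(r["source_db"], r["id"]) for r in results])  # [('SCDB-A', 'a1'), ('SCDB-B', 'b1')]
print(issues)  # ["SCDB-B/b1: unexpected format 'custom-json'"]
```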

- Verify source documentation:

  - Check that scenarios in the databases include source information (D3.2 Section 4.2.3).

  - Validate that sources are properly documented, stating whether each scenario originates from data or from expert knowledge (D3.2 Sections 4.2.3 and 8.1.1).

  - Check that exposure data are available for statistical analysis (D5.3 Section 4.3).
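
The source documentation checks lend themselves to a simple audit over scenario metadata. The sketch assumes each scenario is a dictionary with a hypothetical `source` entry whose `origin` is either `"data"` or `"expert_knowledge"`, plus an optional `exposure` value; these field names, labels and the function `audit_sources` are illustrative and not taken from D3.2.

```python
# Hypothetical origin labels; D3.2 defines the actual source documentation requirements.
VALID_ORIGINS = {"data", "expert_knowledge"}


def audit_sources(scenarios):
    """Group scenario IDs by source-documentation problem."""
    report = {"missing_source": [], "unknown_origin": [], "no_exposure": []}
    for s in scenarios:
        source = s.get("source") or {}
        if not source:
            report["missing_source"].append(s["id"])
        elif source.get("origin") not in VALID_ORIGINS:
            report["unknown_origin"].append(s["id"])
        # Exposure may legitimately be unavailable, e.g. for knowledge-driven scenarios.
        if s.get("exposure") is None:
            report["no_exposure"].append(s["id"])
    return report


scenarios = [
    {"id": "s1", "source": {"origin": "data", "reference": "drive-017"}, "exposure": 0.8},
    {"id": "s2", "source": {"origin": "expert_knowledge"}},
    {"id": "s3", "source": {"origin": "unknown"}, "exposure": 0.1},
    {"id": "s4"},
]
print(audit_sources(scenarios))
```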

- Evaluate database extension capabilities:

  - Verify that the database supports extensible parameter lists (D3.2 Section 5.5.2).

  - Ensure that the database can accommodate new parameters, for example when standards or protocols are updated (D3.2 Sections 5.5.2 and 8.2.4).

  - Verify that the database supports parameter ranges (D5.3 Section 4.2).
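
One way to meet these extension requirements is to store parameters as an open name-to-value mapping in which each value is either a fixed number or a range. The sketch below is a hypothetical data model (`Range`, `ScenarioRecord`, `add_parameter` and the parameter names are all assumptions), not the storage schema of any particular SCDB.

```python
from dataclasses import dataclass, field
from typing import Union


@dataclass(frozen=True)
class Range:
    """Closed parameter range [low, high] (hypothetical type)."""
    low: float
    high: float

    def __post_init__(self) -> None:
        if self.low > self.high:
            raise ValueError(f"invalid range: {self.low} > {self.high}")


# A parameter is either a fixed value or a range.
Value = Union[float, Range]


@dataclass
class ScenarioRecord:
    """Hypothetical scenario record with an open-ended parameter map."""
    scenario_id: str
    parameters: dict[str, Value] = field(default_factory=dict)

    def add_parameter(self, name: str, value: Value) -> None:
        """Add a new parameter without changing the record structure."""
        if name in self.parameters:
            raise KeyError(f"parameter {name!r} already defined")
        self.parameters[name] = value


record = ScenarioRecord("cut-in-001", {"ego_speed_mps": Range(20.0, 30.0)})
# e.g. a protocol update introduces a parameter that did not exist before:
record.add_parameter("road_friction", 0.8)
print(record)
```

The open mapping avoids a schema change for every new parameter, at the cost of weaker typing; the checks in `Range` and `add_parameter` partly compensate for that.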

- Verify database search and query functionality:

  - Confirm ODD-based filtering capabilities (D6.2 Section 2.2.1).

  - Verify tag-based searching with a standardised taxonomy (D3.2 Section 4.2.2).

  - Validate omission-based queries (NOT statements) (D6.2 Sections 1.5.2 and 2.2.1).

  - Test query reproducibility and result traceability (D6.2 Section 1.5.2).
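
These search checks can be exercised together in a small test harness. The sketch below filters hypothetical scenario records by ODD attributes, required tags and excluded tags (the NOT case), sorts the hits deterministically, and hashes the query together with its result IDs so that a re-run can be compared and traced. The record layout, tag names and `run_query` function are assumptions for illustration; a real SCDB would use its own query interface and the standardised taxonomy from D3.2.

```python
import hashlib
import json


def run_query(scenarios, odd=None, include_tags=(), exclude_tags=()):
    """Filter hypothetical scenario records by ODD attributes, required tags and NOT-tags.

    Returns the sorted matching IDs and a SHA-256 fingerprint of query plus results.
    """
    odd = odd or {}
    hits = []
    for s in scenarios:
        if any(s["odd"].get(key) != value for key, value in odd.items()):
            continue  # outside the requested ODD
        tags = set(s["tags"])
        if not set(include_tags) <= tags:
            continue  # a required tag is missing
        if tags & set(exclude_tags):
            continue  # omission-based (NOT) criterion
        hits.append(s["id"])
    hits.sort()  # deterministic order, so identical queries give identical results
    record = {"odd": odd, "include": sorted(include_tags),
              "exclude": sorted(exclude_tags), "results": hits}
    fingerprint = hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()
    return hits, fingerprint


database = [
    {"id": "s1", "odd": {"road_type": "motorway"}, "tags": ["cut-in", "rain"]},
    {"id": "s2", "odd": {"road_type": "motorway"}, "tags": ["cut-in"]},
    {"id": "s3", "odd": {"road_type": "urban"}, "tags": ["cut-in"]},
]
query = {"odd": {"road_type": "motorway"}, "include_tags": ["cut-in"], "exclude_tags": ["rain"]}
hits, fingerprint = run_query(database, **query)
print(hits)  # ['s2']
print(run_query(database, **query)[1] == fingerprint)  # True: the re-run is reproducible
```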

- Review data accuracy (D5.3 Section 4.6):

  - Assess the correctness of data entered into the scenario database.

  - Cross-reference the data against known benchmarks or references.

  - Verify that scenarios accurately reflect real-world conditions.
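
Cross-referencing against benchmarks can start with two simple checks on a single parameter: flag values outside a physically plausible range, and compare the mean of the remaining values with a reference mean from a trusted benchmark. The function `check_accuracy`, the plausible range, the benchmark value and the 10% relative tolerance below are hypothetical; which parameters and references to use is up to the assessor.

```python
from statistics import mean


def check_accuracy(values, plausible_range, benchmark_mean, rel_tol=0.1):
    """Flag implausible values and compare the mean of the plausible ones with a benchmark.

    Implausible values are reported separately and excluded from the comparison.
    """
    low, high = plausible_range
    plausible = [v for v in values if low <= v <= high]
    implausible = [v for v in values if not low <= v <= high]
    if not plausible:
        return {"implausible": implausible, "mean": None, "within_benchmark": False}
    observed = mean(plausible)
    deviation = abs(observed - benchmark_mean) / abs(benchmark_mean)
    return {"implausible": implausible, "mean": observed, "within_benchmark": deviation <= rel_tol}


# Hypothetical ego speeds [m/s] from a scenario set; 250.0 looks like a recording error.
speeds = [20.0, 25.0, 30.0, 250.0]
print(check_accuracy(speeds, plausible_range=(0.0, 70.0), benchmark_mean=25.0))
```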

- Review data consistency (D5.3 Section 4.6):

  - Check for uniformity across all scenarios, for example in:

    - Units of measurement

    - Data formats

    - Terminology

    - Standardised naming conventions

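
Parts of the consistency review can be automated once the database's own conventions are written down. The sketch below assumes a hypothetical convention (snake_case attribute names, speeds in m/s, distances in m, times in s) and checks a list of attribute descriptions against it; `check_consistency` and `EXPECTED_UNITS` are illustrative names, not part of any SUNRISE specification.

```python
import re

# Hypothetical convention for this example: snake_case names and SI units per quantity.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)*$")
EXPECTED_UNITS = {"speed": "m/s", "distance": "m", "time": "s"}


def check_consistency(fields):
    """Check (name, quantity, unit) attribute descriptions against the convention."""
    problems = []
    for name, quantity, unit in fields:
        if not SNAKE_CASE.match(name):
            problems.append(f"{name}: name is not snake_case")
        expected = EXPECTED_UNITS.get(quantity)
        if expected is not None and unit != expected:
            problems.append(f"{name}: unit {unit!r}, expected {expected!r}")
    return problems


fields = [
    ("ego_speed", "speed", "m/s"),
    ("targetSpeed", "speed", "km/h"),
    ("initial_gap", "distance", "m"),
]
for problem in check_consistency(fields):
    print(problem)
```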

- Check data freshness (D5.3 Section 4.6):

  - Check how up to date the scenarios are.

  - Verify that the database content is still relevant.

  - Check the last-update timestamps.
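
Freshness can be checked directly from last-update timestamps. In the sketch below, the one-year threshold and the function name `stale_scenarios` are hypothetical; an appropriate threshold is a judgement for the assessor.

```python
from datetime import datetime, timedelta, timezone


def stale_scenarios(last_updated, now, max_age_days=365):
    """Return IDs of scenarios not updated within max_age_days, oldest first.

    last_updated maps scenario ID to a timezone-aware last-update timestamp.
    """
    cutoff = now - timedelta(days=max_age_days)
    stale = [(ts, sid) for sid, ts in last_updated.items() if ts < cutoff]
    return [sid for _ts, sid in sorted(stale)]


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
last_updated = {
    "s1": datetime(2024, 11, 3, tzinfo=timezone.utc),
    "s2": datetime(2022, 5, 20, tzinfo=timezone.utc),
    "s3": datetime(2023, 6, 1, tzinfo=timezone.utc),
}
print(stale_scenarios(last_updated, now))  # ['s2', 's3']
print(max(last_updated.values()).date())   # most recent update: 2024-11-03
```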

- Check the number of scenarios (D5.3 Section 4.6):

  - Count the total number of distinct scenarios available.

  - Obtain an overview of how comprehensive the database is.

  - Check scenario quantity metrics.
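
A count of distinct scenarios should not include the same content twice just because it was stored under two IDs. A minimal sketch, assuming scenarios are JSON-serialisable dictionaries with a database-assigned `id` field (a hypothetical layout), hashes a canonical serialisation of everything except the ID. The function name `count_distinct` is hypothetical, and this only catches exact duplicates; near-duplicates are a matter for the (dis)similarity metrics.

```python
import hashlib
import json


def count_distinct(scenarios):
    """Count scenarios with distinct content, ignoring the database-assigned 'id' field."""
    digests = set()
    for s in scenarios:
        content = {key: value for key, value in s.items() if key != "id"}
        canonical = json.dumps(content, sort_keys=True)  # key order no longer matters
        digests.add(hashlib.sha256(canonical.encode("utf-8")).hexdigest())
    return len(digests)


scenarios = [
    {"id": 1, "type": "cut-in", "ego_speed_mps": 25.0},
    {"id": 2, "ego_speed_mps": 25.0, "type": "cut-in"},  # same content, new ID and key order
    {"id": 3, "type": "cut-out", "ego_speed_mps": 25.0},
]
print(len(scenarios), count_distinct(scenarios))  # 3 2
```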

- Check covered kilometres (D5.3 Section 4.6):

  - Check how many kilometres of driving the scenario database is based on.

  - Assess the span and scale of the included scenarios.

  - Quantify geographic coverage.
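
Where a database is built from recorded drives, the covered kilometres and their geographic spread can be aggregated from per-drive metadata. The sketch assumes a hypothetical list of `(region, distance_km)` pairs and a hypothetical function `kilometres_by_region`; the measure does not apply to knowledge-driven scenarios, which are not derived from a driven distance.

```python
from collections import defaultdict


def kilometres_by_region(drives):
    """Sum driven distance per region and in total.

    drives: iterable of (region, distance_km) pairs (hypothetical metadata layout).
    """
    per_region = defaultdict(float)
    for region, distance_km in drives:
        per_region[region] += distance_km
    return dict(per_region), sum(per_region.values())


drives = [("NL", 120.5), ("DE", 300.0), ("NL", 79.5)]
per_region, total_km = kilometres_by_region(drives)
print(per_region, total_km)        # {'NL': 200.0, 'DE': 300.0} 500.0
print(len(per_region), "regions")  # 2 regions
```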

- Verify scenario distribution (D5.3 Section 4.6):

  - Analyse the breakdown of scenarios by categories such as:

    - Geographic regions

    - Road types

    - Weather conditions

    - Time of day

    - Traffic density

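
The breakdown by category amounts to counting scenarios per category value. The sketch below uses hypothetical scenario dictionaries and a hypothetical function `distribution`; missing values are counted as `"unknown"` so that gaps in the metadata stay visible in the breakdown.

```python
from collections import Counter


def distribution(scenarios, category):
    """Share of scenarios per value of one category; missing values count as 'unknown'."""
    counts = Counter(s.get(category, "unknown") for s in scenarios)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.most_common()}


scenarios = [
    {"road_type": "motorway", "weather": "dry", "time_of_day": "day"},
    {"road_type": "motorway", "weather": "rain", "time_of_day": "night"},
    {"road_type": "urban", "weather": "dry", "time_of_day": "day"},
    {"road_type": "motorway", "weather": "dry"},
]
for category in ("road_type", "weather", "time_of_day"):
    print(category, distribution(scenarios, category))
```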

- Verify scenario complexity (D5.3 Section 4.6):

  - Assess difficulty levels across scenarios, considering:

    - Number of vehicles involved

    - Presence of pedestrians

    - Road complexity

    - Environmental conditions

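
A weighted score is one simple way to summarise these complexity factors and compare difficulty levels across the database. The feature set, the ordinal road scale, the weights, the thresholds and the names `ScenarioFeatures`, `complexity_score` and `difficulty_level` are hypothetical assumptions made for illustration; they are not the assessment method defined in D5.3.

```python
from dataclasses import dataclass


@dataclass
class ScenarioFeatures:
    """Hypothetical per-scenario features used for a complexity estimate."""
    n_vehicles: int
    n_pedestrians: int
    road_complexity: int       # hypothetical ordinal scale: 0 (straight road) to 3 (complex junction)
    adverse_environment: bool  # e.g. rain, fog or darkness


# Hypothetical weights; they would need to be agreed or calibrated for a real assessment.
WEIGHTS = {"vehicles": 1.0, "pedestrians": 2.0, "road": 1.5, "environment": 2.0}


def complexity_score(f: ScenarioFeatures) -> float:
    """Weighted sum of the four complexity factors."""
    return (WEIGHTS["vehicles"] * f.n_vehicles
            + WEIGHTS["pedestrians"] * f.n_pedestrians
            + WEIGHTS["road"] * f.road_complexity
            + WEIGHTS["environment"] * int(f.adverse_environment))


def difficulty_level(score: float) -> str:
    """Map a score to a coarse level using hypothetical thresholds."""
    if score < 5.0:
        return "low"
    if score < 10.0:
        return "medium"
    return "high"


simple = ScenarioFeatures(n_vehicles=2, n_pedestrians=0, road_complexity=0, adverse_environment=False)
hard = ScenarioFeatures(n_vehicles=5, n_pedestrians=2, road_complexity=3, adverse_environment=True)
for features in (simple, hard):
    score = complexity_score(features)
    print(score, difficulty_level(score))  # 2.0 low, then 15.5 high
```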