Allocate

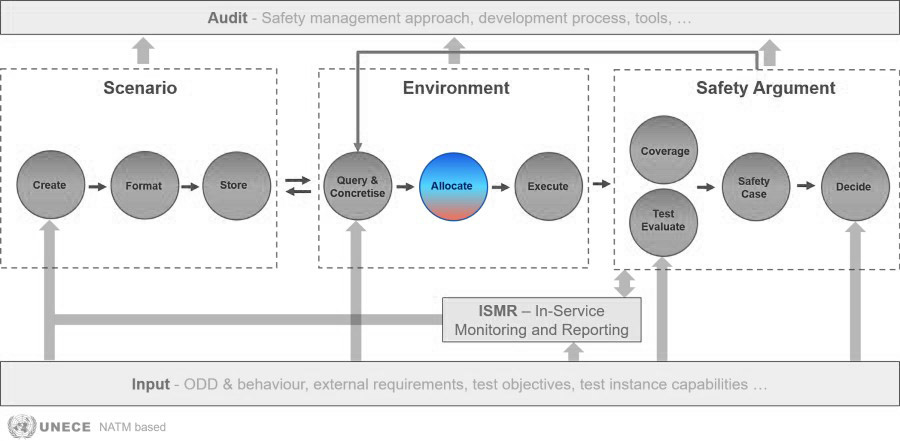

The SUNRISE Safety Assurance Framework (SAF) is test-environment-agnostic: scenarios can be executed in a range of test environments, from fully virtual, through hybrid (such as Hardware-in-the-Loop), to fully physical (such as proving grounds). Within the Allocate block, selected scenarios are allocated to specific test environments. Once a scenario has been allocated to a test environment (also called a test instance), it is executed and the corresponding data is recorded.

The initial allocation process has two key inputs: test case information and test instance capabilities. Test case information includes, for example, scenario descriptions, expected behaviour and pass/fail criteria; specific test case requirements are extracted from it. The second input is the set of available test instances and their capabilities, for example virtual simulation, X-in-the-Loop (XiL) and proving ground testing.

A central part of the Allocate block is the structured comparison of test case requirements against test instance capabilities. This comparison covers aspects such as scenery elements, environmental conditions, dynamic elements and test criteria. Once a suitable test instance is identified, the test case is allocated to it.
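As a minimal illustration of this comparison, the Python sketch below treats each of the four aspects (scenery elements, environmental conditions, dynamic elements, test criteria) as a set of feature labels and reports which requirements a test instance cannot cover. The class names, field names and labels such as `"rain"` are hypothetical; a real comparison would likely need graded or quantitative criteria rather than plain set membership.

```python
from dataclasses import dataclass

# The four comparison aspects named in the text. Modelling each one as a set
# of feature labels is an assumption of this sketch.
ASPECTS = ("scenery", "environment", "dynamic_elements", "test_criteria")


@dataclass
class TestCase:
    name: str
    # aspect -> features the test case needs (hypothetical labels)
    requirements: dict[str, set[str]]


@dataclass
class TestInstance:
    name: str
    # aspect -> features the instance can reproduce (hypothetical labels)
    capabilities: dict[str, set[str]]


def unmet_requirements(case: TestCase, instance: TestInstance) -> dict[str, set[str]]:
    """Return, per aspect, the required features the instance cannot provide."""
    gaps: dict[str, set[str]] = {}
    for aspect in ASPECTS:
        required = case.requirements.get(aspect, set())
        offered = instance.capabilities.get(aspect, set())
        missing = required - offered
        if missing:
            gaps[aspect] = missing
    return gaps


def suitable_instances(case: TestCase, instances: list[TestInstance]) -> list[str]:
    """Names of the instances that cover every requirement of the test case."""
    return [i.name for i in instances if not unmet_requirements(case, i)]
```

An instance counts as suitable only when no aspect reports a gap; the per-aspect gaps are also the kind of information that would be recorded when documenting why an instance was rejected.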

A virtual-simulation-first approach is taken, both for efficiency and for the safety of the people involved. To maximise throughput, test cases suitable for virtual simulation are executed using the lowest-fidelity simulation that meets the test case requirements. After execution, the results are reviewed to determine whether further testing on higher-fidelity test instances is required. This iterative process may include reallocations to achieve the necessary test coverage and accuracy, as represented by the arrow from the Safety Argument block to the Query & Concretise block in the figure above.
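The lowest-fidelity-first iteration can be sketched as a loop over the suitable instances sorted by fidelity. This sketch assumes fidelity can be expressed as a single integer rank (virtual simulation lowest, proving ground highest) and uses a caller-supplied `needs_higher_fidelity` function as a stand-in for executing the test and reviewing its results; both are assumptions for illustration, not part of the SAF.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Instance:
    name: str
    fidelity: int              # hypothetical rank: virtual < XiL < proving ground
    meets_requirements: bool   # outcome of the requirement/capability comparison


def allocate_iteratively(
    instances: list[Instance],
    needs_higher_fidelity: Callable[[Instance], bool],
) -> tuple[list[str], bool]:
    """Start on the lowest-fidelity suitable instance and escalate as needed.

    Returns the instances used, in order, and whether the review was satisfied.
    """
    suitable = sorted(
        (i for i in instances if i.meets_requirements), key=lambda i: i.fidelity
    )
    used: list[str] = []
    for inst in suitable:
        used.append(inst.name)
        # Stand-in for executing the test case and reviewing its results.
        if not needs_higher_fidelity(inst):
            return used, True
    # No suitable instance satisfied the review: the scenario must be flagged.
    return used, False
```

Returning `False` rather than raising keeps the outcome explicit, so an unresolved scenario can be reported onward instead of silently dropped.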

The allocation process includes provisions for external influence, such as road authorities overriding allocations or proving ground operators refusing tests due to safety concerns. Such decisions must be documented for the final assessment. The Allocate block also includes the initial reallocation steps that result from the Safety Argument block.
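One simple way to keep such external decisions traceable is an append-only decision log in which every entry must carry a reason. The sketch below is hypothetical: the `DecisionSource` values, field names and log interface are illustrative only and are not defined by SUNRISE.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class DecisionSource(Enum):
    """Who made an allocation decision (hypothetical categories)."""
    ALLOCATION_METHOD = "allocation_method"
    ROAD_AUTHORITY = "road_authority"
    PROVING_GROUND_OPERATOR = "proving_ground_operator"
    SAFETY_ARGUMENT = "safety_argument"


# Decisions imposed from outside the allocation methodology.
EXTERNAL_SOURCES = {DecisionSource.ROAD_AUTHORITY, DecisionSource.PROVING_GROUND_OPERATOR}


@dataclass(frozen=True)
class AllocationDecision:
    test_case: str
    instance: Optional[str]  # None if the test was refused or left unallocated
    source: DecisionSource
    reason: str
    timestamp: datetime


class DecisionLog:
    """Append-only record of allocation decisions for the final assessment."""

    def __init__(self) -> None:
        self._entries: list[AllocationDecision] = []

    def record(self, test_case: str, instance: Optional[str],
               source: DecisionSource, reason: str) -> AllocationDecision:
        # Reject undocumented decisions outright.
        if not reason.strip():
            raise ValueError("every allocation decision needs a documented reason")
        entry = AllocationDecision(test_case, instance, source, reason,
                                   datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def entries(self) -> list[AllocationDecision]:
        return list(self._entries)

    def external(self) -> list[AllocationDecision]:
        """Decisions imposed by external parties, e.g. road authorities."""
        return [e for e in self._entries if e.source in EXTERNAL_SOURCES]
```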

SAF Application Guidelines for 'Allocate'

In the list below, “D” stands for Deliverable. All deliverables of the SUNRISE project can be found here.

- Review the comparison of test case requirements with test instance capabilities:

  - Ensure that the structure outlined in D3.3 Section 3 was followed, covering scenery elements, environmental conditions, dynamic elements and test criteria (D3.3 Section 3.3).

  - Verify that the relevant metrics were considered:

    - Check that both functional and non-functional metrics were considered (D3.3 Sections 4.3 and 4.4).

    - Confirm that safety was prioritised in the decision-making process (D3.3 Section 4.5).

  - Verify that specific requirements of the system under test (SUT) were considered alongside the test case requirements (D3.5 Section 4.1.3).

  - Check for iterative refinement of both test case requirements and test instance capabilities based on the initial allocation outcomes (D3.5 Section 4.2).

- Review the initial allocation process:

  - Confirm that the process outlined in D3.3 Section 4.5 and D3.3 Figure 27 was followed.

  - Verify that safety standards such as SOTIF were considered in the allocation process, particularly for identifying potentially triggering conditions or functional insufficiencies of the SUT (D3.3 Section 4.5).

  - Validate scenario realisation (D3.5 Section 5.1):

    - Verify that allocated scenarios can be meaningfully realised at the semantic level, not just technically executed.

    - Confirm that the SUT will encounter the intended triggering conditions and respond appropriately according to the scenario logic.

    - Ensure that all relevant behavioural phases can be reached in the allocated test environment.

  - Verify whether special circumstances resulted in deviations from the general methodology and, if so, ensure that these deviations were properly justified (D3.3 Section 4.5).

- Review the re-allocation process (when necessary):

  - Ensure that the iterative re-allocation to higher-fidelity test instances was performed as described in D3.3 Section 4.5.

  - Verify that the reasons for re-allocation decisions were properly documented (D3.3 Section 4.6).

  - Check whether a safety confidence evaluation has been performed for scenarios whose uncertainty exceeds the acceptable false acceptance risk (D3.5 Section 5.2.1).

  - Check whether selection strategies for higher-fidelity validation have been applied (D3.5 Section 5.2.2), for example:

    - Integration of higher-accuracy vehicle dynamics models

    - Adoption of physics-based sensor models

    - Implementation of co-simulation frameworks

    - Model improvement based on correlation against real-world data

  - Check whether correlation analysis has been performed to validate the alignment between different fidelity levels (D3.5 Section 5.2.3).

  - Verify that an expert evaluation was carried out where correlation across SAF workflow components reveals discrepancies (D3.5 Section 5.3). For example, check for issues related to:

    - Input block – insufficient ODD or behaviour descriptions.

    - Scenario block – mismatched scenarios and queries.

    - Environment block – allocation or execution problems.

    - Technical simulation issues – sensor models, vehicle dynamics, environment simulation.

  - Check for documentation of correlation root causes (for future allocation improvements) and of updates to test instance capability knowledge.

- Examine the documentation of the (re-)allocation results:

  - Check for the presence of a tree structure containing all metrics and results of the comparison with all test instances (D3.3 Section 4.6).

  - Ensure that all steps of the (re-)allocation process, including the reasons for decisions and the selection criteria used, were documented and returned to the SAF (D3.3 Sections 4.6 and 5, and Figure 28).

  - Ensure that scenarios that could not be (re-)allocated or were not sufficiently tested are properly flagged and reported to the Coverage block of the Safety Argument component of the SAF (D3.3 Section 4.6 and Figure 28).

  - Check for documentation of all safety confidence evaluations and their outcomes (D3.5 Section 5.2.1).

  - Check for documentation of correlation results between different fidelity levels (D3.5 Section 5.2.3).

  - Check for documentation of scenario realisation validation results (D3.5 Section 5.1).

  - Check for documentation of the iterative refinement of test case requirements and test instance capabilities (D3.5 Section 4.2).

- Review the integration with the SAF workflow:

  - Check for the existence of feedback mechanisms that receive test execution outcomes from subsequent SAF blocks (D3.5 Sections 3 and 5.2).

  - Check that test case requirements and test instance capabilities are refined based on the results of executed test cases (D3.5 Section 4.2).

  - Ensure that validated test outcomes contribute to trustworthy safety arguments (D3.5 Section 6).
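The documentation guidelines mention a tree structure holding all metrics and comparison results for every test instance (D3.3 Section 4.6), as well as flagging of scenarios that could not be (re-)allocated or were insufficiently tested. A minimal sketch of both follows; the node layout, the `{instance: {metric: value}}` input shape and the status labels are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Node:
    """Node of a hypothetical documentation tree: label, optional result, children."""
    label: str
    result: Optional[Any] = None
    children: list["Node"] = field(default_factory=list)

    def add(self, label: str, result: Optional[Any] = None) -> "Node":
        child = Node(label, result)
        self.children.append(child)
        return child


def build_allocation_tree(test_case: str, comparisons: dict[str, dict[str, Any]]) -> Node:
    """Root = test case, level 1 = test instances, level 2 = metric results.

    `comparisons` is assumed to map instance name -> {metric name: result}.
    """
    root = Node(test_case)
    for instance, metrics in comparisons.items():
        instance_node = root.add(instance)
        for metric, value in metrics.items():
            instance_node.add(metric, value)
    return root


def render(node: Node, depth: int = 0) -> list[str]:
    """Indented text view of the tree, e.g. for an allocation report."""
    line = "  " * depth + node.label
    if node.result is not None:
        line += f": {node.result}"
    lines = [line]
    for child in node.children:
        lines.extend(render(child, depth + 1))
    return lines


# Hypothetical status labels that require reporting to the Coverage block.
FLAG_STATUSES = {"not_allocated", "insufficiently_tested"}


def scenarios_to_flag(status: dict[str, str]) -> list[str]:
    """Scenarios to be flagged and reported to the Coverage block."""
    return sorted(s for s, st in status.items() if st in FLAG_STATUSES)
```

Joining the output of `render` with newlines gives an indented, human-readable view of every instance that was compared, including those that were rejected.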
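For the correlation analysis between fidelity levels (D3.5 Section 5.2.3), one simple option is to compare a key performance indicator measured for the same scenarios at two fidelity levels, using the Pearson correlation coefficient together with the largest absolute deviation. This choice of statistics and the thresholds `min_r` and `max_abs_diff` are placeholders chosen for this sketch; D3.5 may prescribe different measures. A result that is not aligned would be a trigger for the expert evaluation described in the guidelines above.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long series with at least 2 points")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


def fidelity_alignment(
    low: list[float],
    high: list[float],
    min_r: float = 0.9,          # placeholder threshold
    max_abs_diff: float = 0.5,   # placeholder threshold, in the KPI's unit
) -> tuple[float, float, bool]:
    """Compare one KPI for the same scenarios at a lower and a higher fidelity.

    Returns (correlation, worst absolute deviation, aligned?).
    """
    r = pearson(low, high)
    worst = max(abs(a - b) for a, b in zip(low, high))
    return r, worst, (r >= min_r and worst <= max_abs_diff)
```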