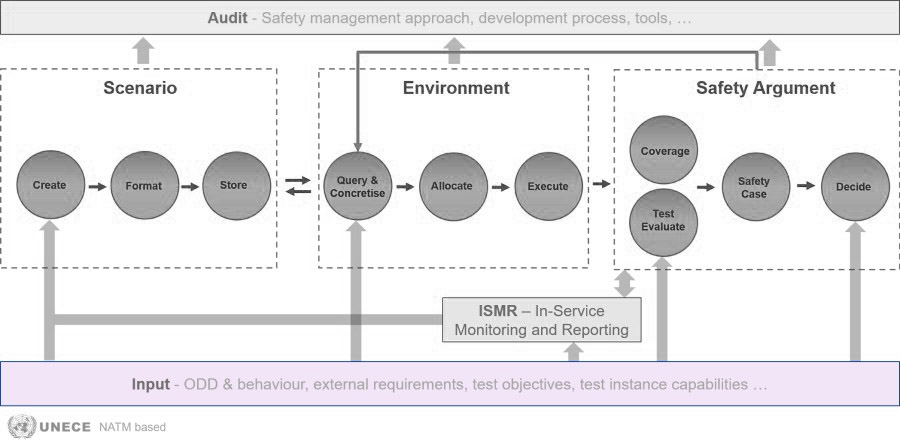

Inputs to the SAF

Five arrows lead from the Input block into blocks of the Safety Assurance Framework (SAF). The Input block covers information such as the description of the Operational Design Domain (ODD), external requirements, the specifications of the Cooperative, Connected and Automated Mobility (CCAM) system, variables to be measured during test execution, pass/fail criteria for successful test execution, and monitoring requirements.

The ODD description of the CCAM system is considered at all five arrows. The ODD description includes ranges of relevant attributes, such as the maximum vehicle speed and the maximum rainfall intensity the system can handle. The ODD description may adopt an inclusive approach (describing what is inside the ODD), an exclusive approach (describing what is outside the ODD), or a combination of the two. It would be highly beneficial if the ODD description were standardized and machine-readable. For the format of the ODD, it is suggested to follow the ODD definition format given in ISO 34503 (2023).
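As an illustration of what a machine-readable ODD description could look like, the following minimal sketch combines the inclusive and exclusive approaches. The class names, attribute names, and numeric ranges are hypothetical and chosen only for this example; they are not taken from ISO 34503.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AttributeRange:
    """Closed interval [minimum, maximum] for one numeric ODD attribute."""
    minimum: float
    maximum: float

    def contains(self, value: float) -> bool:
        return self.minimum <= value <= self.maximum


@dataclass
class OddDescription:
    """Hypothetical ODD: inclusive ranges plus exclusive (forbidden) ranges."""
    included: dict = field(default_factory=dict)  # attribute name -> AttributeRange
    excluded: dict = field(default_factory=dict)  # attribute name -> AttributeRange

    def is_inside(self, conditions: dict) -> bool:
        # Inclusive part: every listed attribute must be reported and in range.
        for name, allowed in self.included.items():
            if name not in conditions or not allowed.contains(conditions[name]):
                return False
        # Exclusive part: no reported attribute may fall in a forbidden range.
        for name, forbidden in self.excluded.items():
            if name in conditions and forbidden.contains(conditions[name]):
                return False
        return True


# Illustrative values only (assumptions, not taken from any standard).
odd = OddDescription(
    included={
        "vehicle_speed_kph": AttributeRange(0.0, 60.0),
        "rainfall_mm_per_h": AttributeRange(0.0, 10.0),
    },
    excluded={"ambient_temperature_c": AttributeRange(-40.0, -10.0)},
)

inside = odd.is_inside({"vehicle_speed_kph": 45.0, "rainfall_mm_per_h": 2.5})
too_wet = odd.is_inside({"vehicle_speed_kph": 45.0, "rainfall_mm_per_h": 12.0})
too_cold = odd.is_inside(
    {"vehicle_speed_kph": 45.0, "rainfall_mm_per_h": 2.5, "ambient_temperature_c": -20.0}
)
print(inside, too_wet, too_cold)
```

In this sketch, a condition counts as inside the ODD only if it satisfies every inclusive range and hits no exclusive range. A real ODD description would also need categorical attributes (for example road type), which are omitted here for brevity.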

The Create block can utilize the ODD description to create scenarios that are part of the described ODD.

The Query & Concretize block uses the ODD description to generate the test cases that are needed for the safety assurance of the CCAM system, including a description of the output needed to analyse the results.

The Test Evaluate block uses the ODD description to determine whether the CCAM system operated safely within its ODD in a specific test.

The Coverage block uses the ODD description to check whether the ODD space is sufficiently covered.
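One simple way to reason about ODD coverage is to divide each ODD attribute range into bins and measure the fraction of resulting grid cells that contain at least one test. The sketch below assumes this grid-based metric purely for illustration; the text does not prescribe a coverage metric, and the function names, attribute names, and values are hypothetical.

```python
def bin_index(value, minimum, maximum, n_bins):
    """Map a value in [minimum, maximum] to a bin index in 0..n_bins-1."""
    if not minimum <= value <= maximum:
        raise ValueError(f"{value} lies outside [{minimum}, {maximum}]")
    width = (maximum - minimum) / n_bins
    # The upper bound itself is assigned to the last bin.
    return min(int((value - minimum) / width), n_bins - 1)


def odd_coverage(odd_ranges, tests, n_bins):
    """Fraction of grid cells of the ODD space hit by at least one test."""
    names = sorted(odd_ranges)
    hit_cells = set()
    for test in tests:
        cell = tuple(
            bin_index(test[name], odd_ranges[name][0], odd_ranges[name][1], n_bins)
            for name in names
        )
        hit_cells.add(cell)
    return len(hit_cells) / (n_bins ** len(names))


# Illustrative ODD ranges and executed test conditions (assumed values).
odd_ranges = {
    "vehicle_speed_kph": (0.0, 60.0),
    "rainfall_mm_per_h": (0.0, 10.0),
}
tests = [
    {"vehicle_speed_kph": 10.0, "rainfall_mm_per_h": 1.0},
    {"vehicle_speed_kph": 50.0, "rainfall_mm_per_h": 1.0},
    {"vehicle_speed_kph": 50.0, "rainfall_mm_per_h": 9.0},
]

coverage = odd_coverage(odd_ranges, tests, n_bins=2)
print(coverage)  # 3 of the 4 cells are hit: 0.75
```

Whether a given fraction counts as "sufficient" would depend on a threshold and a bin resolution that have to be agreed upon separately; neither is defined here.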

The In-Service Monitoring and Reporting (ISMR) block uses the ODD description to verify whether the system is operating inside its ODD.

Besides the ODD description, various blocks also need the external requirements applying to the CCAM system. These requirements should reflect the required behavioural competences, regulations, rules of the road, safety objectives, standards and best practices. The requirements can be a source for creating scenarios, which is why the requirements are considered to be part of the first interface. Furthermore, it is important that the Query & Concretize block considers the requirements and outputs relevant test cases, as the goal of the SAF is to assure that the requirements are met.

Note that this process also establishes the means to measure compliance with the requirements for the test cases. Not all requirements can be formulated as test validation criteria, which is why the requirements can also be communicated to the Test Evaluate block.

Lastly, the ISMR block needs the requirements as input to check whether system-level requirements are satisfied over the lifetime of the system. Note that requirements can differ greatly from system to system, so formalizing them might be challenging. For that reason, a standardized description format would be preferred.

The CCAM system is the main subject of the test cases, so its technical specifications need to be provided to the Query & Concretize block. The CCAM system can also be a source for creating scenarios. For example, scenarios can be created using knowledge of the system architecture and fault analysis techniques such as systems-theoretic process analysis (STPA). The CCAM system can be a physical prototype, a virtual model of the actual system, or a combination of both.

If some variables need to be measured during test execution in addition to those needed to verify the test objectives, this information can be provided to the Query & Concretize block.

Additionally, pass/fail criteria for successful test execution have to be provided to the Query & Concretize block so that it can be determined whether a test case has been executed successfully. For example, if there are tolerances on speed values or lateral path deviations, these shall be included. When a test case is executed outside the pass/fail criteria, its execution has to be deemed unsuccessful from an execution point of view. These examples relate to tests allocated to the real world or a test track, but other examples may apply to virtual test environments (for example, when the simulation output contains an error).
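The tolerance example above can be sketched as a simple execution-validity check. This is only an illustration under assumed names, units, and tolerance values, none of which come from the SAF itself. Note that it decides whether the test was executed as intended, not whether the CCAM system behaved safely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutionCriteria:
    """Hypothetical pass/fail criteria for the execution of one test case."""
    target_speed_mps: float
    speed_tolerance_mps: float
    max_lateral_deviation_m: float


def execution_is_valid(criteria, speed_samples_mps, lateral_deviation_samples_m):
    """Return True if every recorded sample stays within the tolerances."""
    # Without any recorded samples the execution cannot be confirmed as valid.
    if not speed_samples_mps or not lateral_deviation_samples_m:
        return False
    speed_ok = all(
        abs(speed - criteria.target_speed_mps) <= criteria.speed_tolerance_mps
        for speed in speed_samples_mps
    )
    lateral_ok = all(
        abs(deviation) <= criteria.max_lateral_deviation_m
        for deviation in lateral_deviation_samples_m
    )
    return speed_ok and lateral_ok


# Assumed tolerances: target 13.9 m/s +/- 0.5 m/s, at most 0.2 m off the path.
criteria = ExecutionCriteria(
    target_speed_mps=13.9, speed_tolerance_mps=0.5, max_lateral_deviation_m=0.2
)

valid_run = execution_is_valid(criteria, [13.7, 14.1, 13.9], [0.05, -0.12])
invalid_run = execution_is_valid(criteria, [13.7, 14.6], [0.05, -0.12])
print(valid_run, invalid_run)
```

A run that fails this check would be repeated or discarded rather than counted as a failed safety test, which matches the distinction made above between execution success and the evaluation of the test result.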

For all inputs, it is assumed that simulation models (other than those representing the CCAM system) and the simulation platform are part of the “Execute” component and are therefore not provided externally. Hence, they are not part of the listed interfaces.