Execute

In the Execute block, tests are run in a virtual, hybrid or physical test environment, depending on the test instance selected in the Allocate block. The allocated test cases form the input for this block. The Safety Assurance Framework (SAF) does not specify how the tests are carried out; this is the responsibility of the entity performing the tests. If the Allocate block has been applied correctly, the selected test instance is guaranteed to be capable of performing the tests. For virtual testing, the SAF recommends a harmonised approach. To that end, the SUNRISE project developed a harmonised V&V simulation framework for the virtual validation of Cooperative Connected Automated Mobility (CCAM) systems. Its use is optional.

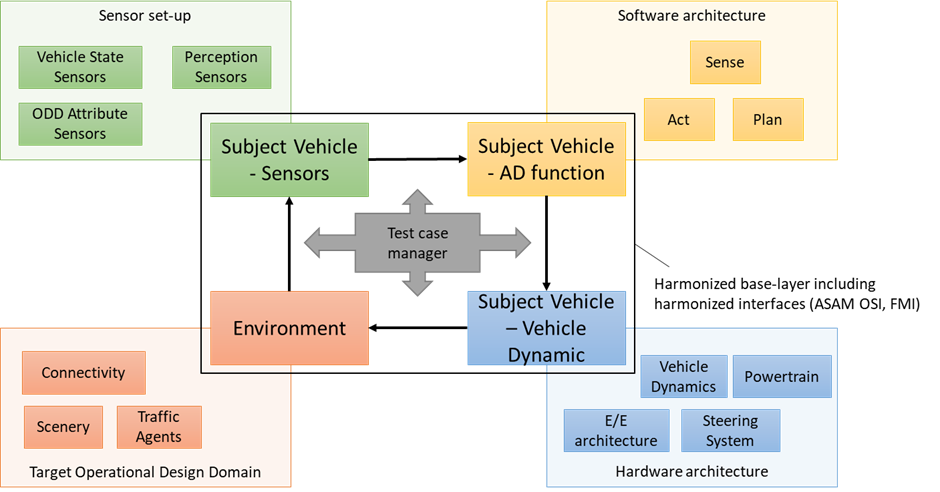

As shown in the figure above, the SUNRISE harmonised V&V simulation framework is built on a so-called base layer of four interconnected subsystems, namely:

- Subject Vehicle – Sensors (the sensors installed in the vehicle)

- Subject Vehicle – Automated Driving (AD) function (the behavioural competencies of the vehicle)

- Subject Vehicle – Vehicle Dynamics

- Environment (in which the vehicle operates)

In this approach, the base layer is the core element that can be harmonised, because these four subsystems are essential for all simulations. This makes it possible to use standardised interfaces between the subsystems. The user can extend the framework in four dedicated dimensions, related to the target Operational Design Domain (ODD), the vehicle sensor set-up, the software architecture and the hardware architecture.
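The data flow between the four base-layer subsystems can be illustrated with a minimal sketch. All class names, message types and the toy braking rule below are hypothetical and chosen only for illustration; they are not part of the SUNRISE framework, and a real set-up would exchange data through standardised interfaces rather than these ad hoc dataclasses.

```python
from dataclasses import dataclass


@dataclass
class GroundTruth:
    """Hypothetical environment output: gap to a stationary object in metres."""
    gap_m: float


@dataclass
class SensorData:
    """Hypothetical sensor output: measured gap in metres."""
    measured_gap_m: float


@dataclass
class Command:
    """Hypothetical AD function output: requested acceleration in m/s^2."""
    accel_mps2: float


class Environment:
    def __init__(self, gap_m: float) -> None:
        self.gap_m = gap_m

    def step(self, ego_speed_mps: float, dt: float) -> GroundTruth:
        # The gap shrinks as the subject vehicle advances.
        self.gap_m -= ego_speed_mps * dt
        return GroundTruth(self.gap_m)


class Sensors:
    def sense(self, truth: GroundTruth) -> SensorData:
        # Idealised sensor: passes the ground truth through unchanged.
        return SensorData(truth.gap_m)


class ADFunction:
    def decide(self, data: SensorData) -> Command:
        # Toy rule: brake once the measured gap drops below 20 m.
        return Command(-5.0 if data.measured_gap_m < 20.0 else 0.0)


class VehicleDynamics:
    def __init__(self, speed_mps: float) -> None:
        self.speed_mps = speed_mps

    def step(self, cmd: Command, dt: float) -> float:
        # Point-mass model; the speed cannot become negative.
        self.speed_mps = max(0.0, self.speed_mps + cmd.accel_mps2 * dt)
        return self.speed_mps


def run(steps: int, dt: float = 0.1) -> tuple[float, float]:
    """Run the closed loop and return (final gap in m, final speed in m/s)."""
    env, sensors, ad, dyn = Environment(50.0), Sensors(), ADFunction(), VehicleDynamics(10.0)
    speed = dyn.speed_mps
    for _ in range(steps):
        truth = env.step(speed, dt)   # Environment -> Sensors
        data = sensors.sense(truth)   # Sensors -> AD function
        cmd = ad.decide(data)         # AD function -> Vehicle Dynamics
        speed = dyn.step(cmd, dt)     # Vehicle Dynamics -> Environment
    return env.gap_m, speed
```

Because each subsystem only sees the message type of its neighbour, any one of them could be swapped for a higher-fidelity model without touching the others, which is the property that standardised interfaces are meant to provide.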

SAF Application Guidelines for 'Execute'

In the list below, “D” stands for Deliverable. All deliverables of the SUNRISE project can be found here.

- Verify selection and validation of appropriate testing environments:

- Check the assessment of virtual testing limitations (D4.6 Section 2.3), including:

- Latencies in data exchange between co-simulation models.

- Semantic gaps between real world and simulation (sim2real gap).

- Sensor simulation discrepancies with real sensor behaviour.

- Check the selection of appropriate hybrid or real-world testing methods when serious virtual testing limitations are identified (D4.6 Section 2.4):

- Black Box testing on proving grounds or public roads (D4.6 Section 3.1).

- Vehicle-in-the-Loop testing for AD function validation (D4.6 Section 4).

- Driver-in-the-Loop testing for human-machine interaction scenarios (D4.6 Section 5).

- Verify that the simulation framework used aligns with the harmonised V&V simulation framework (D4.4 Section 4.5), which includes:

- Checking that the base layer contains the four (or fewer, if applicable) core interconnected subsystems:

- Subject Vehicle – Sensors

- Subject Vehicle – AD function

- Subject Vehicle – Vehicle Dynamics

- Environment

- Validating that the data formats used align with the recommended standards (D4.4 Section 4.3):

- ASAM OpenSCENARIO for scenario descriptions

- ASAM OpenDRIVE for road networks

- ASAM OpenLABEL for sensor data and scenario tagging

- Confirming the framework uses standardised interfaces between subsystems, particularly ASAM OSI as detailed in D4.4 Section 4.4.

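A first, shallow version of the data format check could look like the sketch below. It assumes the conventional file extensions `.xosc` (OpenSCENARIO XML), `.xodr` (OpenDRIVE) and `.json` (OpenLABEL); the role names and the function `find_format_mismatches` are hypothetical. An extension check does not replace validation against the ASAM schemas.

```python
from pathlib import PurePath

# Conventional file extensions, assumed here as a first sanity check only.
EXPECTED_SUFFIX = {
    "scenario": ".xosc",      # ASAM OpenSCENARIO XML
    "road_network": ".xodr",  # ASAM OpenDRIVE
    "labels": ".json",        # ASAM OpenLABEL (JSON-based)
}


def find_format_mismatches(artifacts: dict[str, str]) -> list[str]:
    """Return one message per artifact that is missing or has an unexpected suffix."""
    problems = []
    for role, suffix in EXPECTED_SUFFIX.items():
        path = artifacts.get(role)
        if path is None:
            problems.append(f"{role}: missing")
        elif PurePath(path).suffix.lower() != suffix:
            problems.append(f"{role}: expected {suffix}, got {path}")
    return problems
```

An empty result means every expected artifact is present with the expected extension; any message in the list points to an item that needs a closer look.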

- Assess virtual test validation:

- Check that appropriate validation setup has been applied (D4.5 Section 3):

- 1-A.3 for separate subsystem validation

- 1-B.2 for integrated validation

- 1-C.3 for comprehensive system validation

- Verify that correlation analysis between virtual simulation and physical tests was performed (D4.1 Section 3.6, D4.2 Section 8.1 – R1.1_14, D4.5 Section 3).

- Confirm robustness and representativeness of virtual validation framework (D4.2 Section 8.1 – R1.2_10).

- Check that model quality metrics meet defined thresholds (D4.1 Section 3.6, D4.5 Section 3.1).

- Review the simulation model validation test report (D4.1 Section 3.6).

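The correlation and threshold checks above can be sketched as follows. This is a minimal illustration, assuming that the simulated and physical signals are already time-aligned and equally sampled. The choice of Pearson correlation and RMSE as metrics, and the threshold values `min_r` and `max_rmse`, are assumptions for this example; the metrics and thresholds actually required are defined in the cited deliverables.

```python
import math


def pearson(sim: list[float], phys: list[float]) -> float:
    """Pearson correlation coefficient between two equally sampled signals."""
    if len(sim) != len(phys) or len(sim) < 2:
        raise ValueError("signals must have the same length (at least 2 samples)")
    mean_s = sum(sim) / len(sim)
    mean_p = sum(phys) / len(phys)
    cov = sum((s - mean_s) * (p - mean_p) for s, p in zip(sim, phys))
    var_s = sum((s - mean_s) ** 2 for s in sim)
    var_p = sum((p - mean_p) ** 2 for p in phys)
    if var_s == 0 or var_p == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov / math.sqrt(var_s * var_p)


def rmse(sim: list[float], phys: list[float]) -> float:
    """Root-mean-square error between simulation and physical measurement."""
    if len(sim) != len(phys) or not sim:
        raise ValueError("signals must be non-empty and of equal length")
    return math.sqrt(sum((s - p) ** 2 for s, p in zip(sim, phys)) / len(sim))


def meets_thresholds(sim: list[float], phys: list[float],
                     min_r: float = 0.95, max_rmse: float = 0.5) -> bool:
    """Check both metrics against illustrative (hypothetical) thresholds."""
    return pearson(sim, phys) >= min_r and rmse(sim, phys) <= max_rmse
```

Two metrics are combined because correlation alone is insensitive to a constant offset: a simulated signal that is shifted by a fixed amount still correlates perfectly with the measurement, but fails the RMSE check.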

- Assess hybrid test validation:

- Assess the validation of virtual components (D4.6 Sections 4.2 and 6):

- Verify all virtual models were validated before use in hybrid testing.

- Check virtual environment validation for realistic representation.

- Confirm calibration processes align virtual inputs with real sensor outputs.

- Check for accounting of noise, latency, and distortion in sensor stimulation.

- For Vehicle-in-the-Loop tests on Proving Grounds (D4.6 Section 4.1):

- Verify real vehicle with real AD function was used.

- Check that virtual traffic agents and sensor models were properly integrated.

- Confirm ETSI-compliant V2X messaging for collective perception testing.

- For Vehicle-in-the-Loop tests on Testbenches (D4.6 Section 4.2):

- Check sensor stimulator calibrations (GNSS, Radar, Camera, LiDAR).

- Verify correlation results.

- Check real-time synchronisation between physical and virtual components.

- For Driver-in-the-Loop on Proving Grounds (D4.6 Section 5.2):

- Verify VR/AR system integration with real vehicle.

- Check optical tracking system with stereo camera pairs.

- For Driver-in-the-Loop in Simulators (D4.6 Section 5.3):

- Verify HMI device integration.

- Assess physical test validation:

- Assess Black Box Testing on Proving Grounds or Public Roads (D4.6 Section 3.1):

- Verify that the system under test (SUT) was properly instrumented with cameras, sensors and measurement systems.

- Check that two-step validation was implemented: first on test tracks, then on public roads.

- Evaluate validation metrics and Key Performance Indicators (KPIs):

- Verify that requirements from protocols, standards and regulations were used where applicable, for example from Euro NCAP, the GSR or the 1958 Agreement (D4.2 Section 8.1, requirements R1.1_01, R1.2_01 and R3.1_01).

- Check that SOTIF requirements were addressed (D4.2 Section 4.1), including:

- Risk quantification for scenarios, triggering conditions and ODD boundaries.

- Validation results for known unsafe scenarios.

- Validation results for discovered unknown unsafe scenarios.

- Assessment of residual risk.

- Check test case execution results:

- Check that all executed test cases generated desired results.

- Confirm test coverage metrics have been generated. For example:

- Check Euro NCAP and GSR compliance metrics (D4.2 Section 8.1 – R1.1_01, R1.2_01, R3.1_01).

- Verify sensor validation metrics were applied (D4.2 Section 8.1 – R1.1_02).

- Review correlation coefficients between simulation and physical test results (D4.2 Section 8.2 – R2.1_49).

- Confirm that test results include both virtual and physical validation data where applicable (D4.2 Section 8.1 – R1.1_14).

- Verify that executed simulations correspond to the requests from scenario manager (D4.2 Section 8.1 – R1.1_25).
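The last check, comparing executed simulations against the requests from the scenario manager, amounts to a set comparison. The sketch below assumes that each requested and executed test case carries a unique identifier; the function name and the identifier format are hypothetical.

```python
def compare_requests(requested: set[str], executed: set[str]) -> dict[str, object]:
    """Compare requested test case IDs with those actually executed."""
    if not requested:
        raise ValueError("no test cases were requested")
    return {
        # Requested but never executed.
        "missing": sorted(requested - executed),
        # Executed without a matching request.
        "unrequested": sorted(executed - requested),
        # Share of requested test cases that were executed.
        "coverage": len(requested & executed) / len(requested),
    }
```

A coverage of 1.0 with empty `missing` and `unrequested` lists indicates that the executed simulations match the requests one to one.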

- Verify documentation related to test execution:

- Check for documentation of test results, including key outcomes and the values of metrics and KPIs, and check that validated test outcomes contribute to trustworthy safety arguments (D3.5 Section 6).

- Check for documentation of the applied validation methodology and metrics, including the decision-making process (D4.5 Section 3 Introduction and 3.1).

- Check for documentation of values of correlation quality metrics (D4.5 Section 3.1).

- Check for documentation of toolchain functionality assessment results for repeatability, uncertainty, performance, and usability (D4.5 Sections 3.7-3.9).

- Check for documentation on matching use case requirements to validation setup (D4.5 Section 4).