Test Evaluate

The Test Evaluate block assesses the results of each test execution to determine whether the CCAM system has passed or failed, for example whether the system stayed within its speed limits or successfully avoided a collision. The SUNRISE SAF does not prescribe exact pass/fail values, because these depend on various external factors. However, the following guidelines help SAF users make pass/fail decisions:

- Whether the intended test has been executed. For example, whether the intended cut-in actually occurred.

- Whether the test outcomes contain anomalies, such as simulation errors.

- Whether use-case-specific pass/fail criteria received from the input layer have been addressed correctly. For example, whether a speed sign has been correctly detected and respected.

- Whether a comparison to a safety performance baseline, for example a human driver reference model, has been performed correctly.
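
The four guidelines above can be combined into a simple decision procedure. The Python sketch below is illustrative only: the `TestRunResult` fields, the minimum time-to-collision (TTC) metric used for the baseline comparison, and the baseline value are hypothetical assumptions, not interfaces or thresholds defined by the SUNRISE SAF. As a design choice, it also assumes that a run which was not executed as intended, or which contains anomalies, is reported as invalid rather than failed, so that it can be repeated instead of counting against the system.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"
    INVALID = "invalid"  # not executed as intended, or outcome unreliable


@dataclass
class TestRunResult:
    # Hypothetical fields; a real test log would be far richer.
    cut_in_occurred: bool                                 # intended event triggered?
    sim_errors: list[str] = field(default_factory=list)   # anomalies from the toolchain
    max_speed_kph: float = 0.0                            # highest ego speed observed
    speed_limit_kph: float = 0.0                          # limit from the detected sign
    min_ttc_s: float = float("inf")                       # minimum time-to-collision


def evaluate(run: TestRunResult, baseline_min_ttc_s: float) -> Verdict:
    """Apply the four guidelines in order; all thresholds are illustrative."""
    # 1. Was the intended test actually executed?
    if not run.cut_in_occurred:
        return Verdict.INVALID
    # 2. Does the outcome contain anomalies such as simulation errors?
    if run.sim_errors:
        return Verdict.INVALID
    # 3. Use-case-specific criterion: the speed limit must be respected.
    if run.max_speed_kph > run.speed_limit_kph:
        return Verdict.FAIL
    # 4. Baseline comparison: at least as safe as the (hypothetical) reference value.
    if run.min_ttc_s < baseline_min_ttc_s:
        return Verdict.FAIL
    return Verdict.PASS


run = TestRunResult(cut_in_occurred=True, max_speed_kph=48.0,
                    speed_limit_kph=50.0, min_ttc_s=2.1)
print(evaluate(run, baseline_min_ttc_s=1.5))  # Verdict.PASS
```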

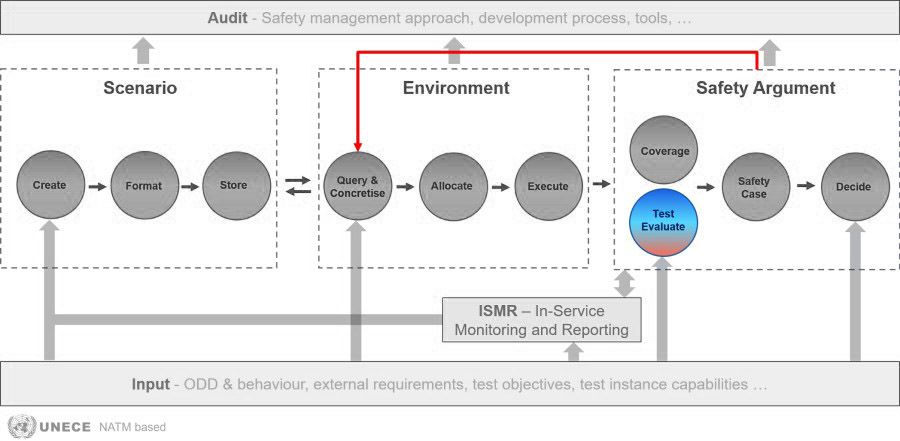

Depending on the analysis outcomes, the Test Evaluate block may trigger an iterative process that derives new concrete scenarios within the parameter ranges of the logical scenario in order to identify failure conditions (for example, parameter combinations that lead to system failure). This feedback loop for continuous test improvement is indicated by the red arrow in the image above.
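
The feedback loop can be pictured as a search over the parameter space of a logical scenario. The Python sketch below uses plain random sampling followed by local refinement around known failures; this is only one simple strategy chosen for illustration, not a method prescribed by the SAF. The two cut-in parameters, their ranges, and the pass/fail rule inside the hypothetical `run_concrete_scenario` function are stand-ins for real test executions.

```python
import random

# Hypothetical logical scenario for a cut-in: parameter -> (min, max).
RANGES: dict[str, tuple[float, float]] = {
    "cut_in_distance_m": (5.0, 60.0),   # gap at the moment of cut-in
    "relative_speed_mps": (0.0, 15.0),  # ego speed minus cut-in vehicle speed
}


def run_concrete_scenario(params: dict[str, float]) -> bool:
    """Stand-in for executing one concrete scenario; True means failure.

    Toy rule (an assumption): the system fails when the time-to-collision
    at the moment of cut-in is below 1.5 s.
    """
    closing_speed = params["relative_speed_mps"]
    if closing_speed <= 0.0:
        return False  # no closing speed, so no collision risk in this toy model
    return params["cut_in_distance_m"] / closing_speed < 1.5


def sample_uniform(rng: random.Random) -> dict[str, float]:
    """Draw one concrete scenario uniformly from the logical scenario ranges."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}


def perturb(params: dict[str, float], scale: float,
            rng: random.Random) -> dict[str, float]:
    """Draw a nearby concrete scenario, clamped to the logical scenario ranges."""
    neighbour = {}
    for name, (lo, hi) in RANGES.items():
        step = rng.gauss(0.0, scale * (hi - lo))
        neighbour[name] = min(hi, max(lo, params[name] + step))
    return neighbour


def search_failures(n_initial: int = 100, n_rounds: int = 3,
                    per_failure: int = 4, seed: int = 0) -> list[dict[str, float]]:
    rng = random.Random(seed)
    # Round 0: broad exploration of the whole parameter space.
    failures = [p for p in (sample_uniform(rng) for _ in range(n_initial))
                if run_concrete_scenario(p)]
    frontier, scale = list(failures), 0.1
    # Later rounds: derive new concrete scenarios around the latest failures,
    # halving the neighbourhood size to concentrate sampling near them.
    for _ in range(n_rounds):
        new_failures = []
        for failure in frontier:
            for _ in range(per_failure):
                candidate = perturb(failure, scale, rng)
                if run_concrete_scenario(candidate):
                    new_failures.append(candidate)
        failures.extend(new_failures)
        frontier, scale = new_failures, scale / 2
    return failures


failures = search_failures()
print(f"{len(failures)} failing concrete scenarios found")
```

In practice each call to `run_concrete_scenario` would be a full simulation or physical test, so the sampling budget (`n_initial`, `n_rounds`, `per_failure`) would have to be chosen with execution cost in mind.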

Ultimately, the Test Evaluate block provides essential input to the Safety Argument block, which combines the test evaluation results with information from the Coverage block to generate safety evidence for the CCAM system under test.

SAF Application Guidelines for 'Test Evaluate'

In the list below, “D” stands for Deliverable. All deliverables of the SUNRISE project can be found here.

- Verify the test run validation, including the proper application of test run validation metrics, to ensure that (D3.5 Section 5.2):
    - The test execution was valid and meaningful
    - The correct test instance was used for the scenario
    - Test scenario importance was properly evaluated
    - Critical scenarios were appropriately prioritised

- Assess scenario realisation to verify that the scenario was meaningfully executed, by checking that (D3.5 Section 5.1):
    - The CCAM system actually encountered the intended triggering conditions
    - The CCAM system responded appropriately to the scenario’s defined logic
    - The scenario behaviour phases that test the intended function were reached
    - Any scenarios that were not properly realised were flagged as “not achieved”

- Evaluate safety confidence metrics by verifying that (D3.5 Section 5.2.1):
    - An acceptable false acceptance risk was properly defined
    - The uncertainty at the test point was estimated
    - This uncertainty was compared against false acceptance risk thresholds
    - Sufficient confidence margins were maintained

- If higher-fidelity testing was performed, review the correlation analysis by checking that (D3.5 Section 5.2.3):
    - The correlation between low- and high-fidelity test instances was examined
    - Appropriate correlation methods were used (such as the Pearson correlation coefficient)
    - Statistical significance was verified (low p-value)
    - Acceptable correlation levels were defined before testing

- Validate the expert analysis documentation by ensuring that it includes (D3.5 Section 5.3):
    - Root cause analysis for correlation discrepancies
    - Assessment of the need to re-allocate tests to different test instances
    - Documentation of further investigations, where required
    - Expert explanations for anomalies or unexpected results
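
The scenario realisation check from the guidelines above can be sketched as a comparison between the behaviour phases a scenario is meant to pass through and the phases actually logged during the run. In this Python sketch, the phase names and the assumption that the test environment logs one phase label per time step are illustrative; the SAF does not define this format.

```python
from collections.abc import Sequence
from dataclasses import dataclass


@dataclass
class RealisationReport:
    scenario_id: str
    status: str                # "achieved" or "not achieved"
    missing_phases: list[str]  # required phases not reached in the required order


def check_realisation(scenario_id: str, required_phases: Sequence[str],
                      observed_phases: Sequence[str]) -> RealisationReport:
    """Flag a run as "not achieved" unless every required behaviour phase
    (e.g. the triggering condition and the response phase) was reached in order.
    """
    next_required = 0
    for phase in observed_phases:
        # Greedy in-order matching: is required_phases a subsequence of the log?
        if next_required < len(required_phases) and phase == required_phases[next_required]:
            next_required += 1
    missing = list(required_phases[next_required:])
    status = "achieved" if not missing else "not achieved"
    return RealisationReport(scenario_id, status, missing)


report = check_realisation(
    "cut_in_042",  # hypothetical scenario identifier
    required_phases=["approach", "cut_in_trigger", "ego_response"],
    observed_phases=["approach", "approach", "end"],
)
print(report.status, report.missing_phases)
# not achieved ['cut_in_trigger', 'ego_response']
```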
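
For the safety confidence metrics, one common way to formalise false acceptance risk comes from conformity assessment in metrology (for example JCGM 106). The true value at the test point is modelled as normally distributed around the measured value with the estimated standard uncertainty, and a result is accepted only if the probability that the true value exceeds the limit stays below the acceptable risk. Whether D3.5 uses exactly this formulation is an assumption. The normal model, the example metric (lateral deviation) and all numbers are illustrative.

```python
from statistics import NormalDist


def false_acceptance_probability(measured: float, std_uncertainty: float,
                                 upper_limit: float) -> float:
    """P(true value > upper_limit), modelling the true value at the test point
    as normally distributed around the measurement (an assumption)."""
    if std_uncertainty <= 0.0:
        return 0.0 if measured <= upper_limit else 1.0
    return 1.0 - NormalDist(measured, std_uncertainty).cdf(upper_limit)


def accept(measured: float, std_uncertainty: float, upper_limit: float,
           max_false_accept_risk: float) -> tuple[bool, float]:
    """Guard-banded acceptance: returns (accepted, margin to acceptance limit)."""
    if not 0.0 < max_false_accept_risk < 1.0:
        raise ValueError("acceptable false acceptance risk must be in (0, 1)")
    # Shrink the limit by z standard uncertainties, so that a result lying exactly
    # on the acceptance limit has max_false_accept_risk of being out of spec.
    z = NormalDist().inv_cdf(1.0 - max_false_accept_risk)
    acceptance_limit = upper_limit - z * std_uncertainty
    margin = acceptance_limit - measured
    return margin >= 0.0, margin


# Illustrative numbers: lateral deviation limit 0.5 m, measured 0.38 m,
# standard uncertainty 0.05 m, acceptable false acceptance risk 5 %.
print(false_acceptance_probability(0.38, 0.05, 0.5))
print(accept(0.38, 0.05, 0.5, 0.05))
```

Because the acceptance decision and the probability check are equivalent for a positive uncertainty, the returned margin doubles as the "confidence margin" the guideline asks about: a small positive margin means the result passes, but only just.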